Join The Newsletter

Get actionable tips and insights on AI, Cyber and Leadership to become resilient in the world of AI

- Dec 18, 2025

Predictive Cyber Measures with AI and Update on Cybersecurity Adoption Lifecycle Model

Read Time: 10 Minutes

Read on: monicatalkscyber.com


***

This blog is an update on the cybersecurity adoption lifecycle model published in 2020: Excerpt from 2020, Detailed 2020 Model on Dark Reading

***

🤯 We have been (and most of us still are) doing cybersecurity maturity all wrong. This is the hill I'll die on. Here's why.

Over the last 5 years, resilience has undoubtedly become one of the biggest focuses of almost every professional, every business, and every organisation out there. While we started talking about ‘resilience’ over the last two decades or so, the concept and implementation of ‘cyber resilience’ for businesses didn’t really hit the mainstream market until the financial crisis of 2008. It gained public traction only after Covid-19 became a global health crisis, exposing our supply chains as part of a complex, intertwined and vulnerable global digital world, and pushing further the real need for resilience in our global infrastructure across all industries and businesses.

I still remember, in the early 2000s when I was talking about “cyber warfare” and “cyber resilience”, it felt more like a theoretical concept to many organisations. I was even told by a company that I was not allowed to use the term “cyber warfare”. This was after Stuxnet hit and showed that the reality was the complete opposite. Stuxnet gave cyber warfare a face, publicly.

Yet most companies were in denial.

Global supply chains, massive digitalisation, the global health crisis and, more recently, emerging technologies like AI have all accelerated the change and the need for resilience and adaptability.

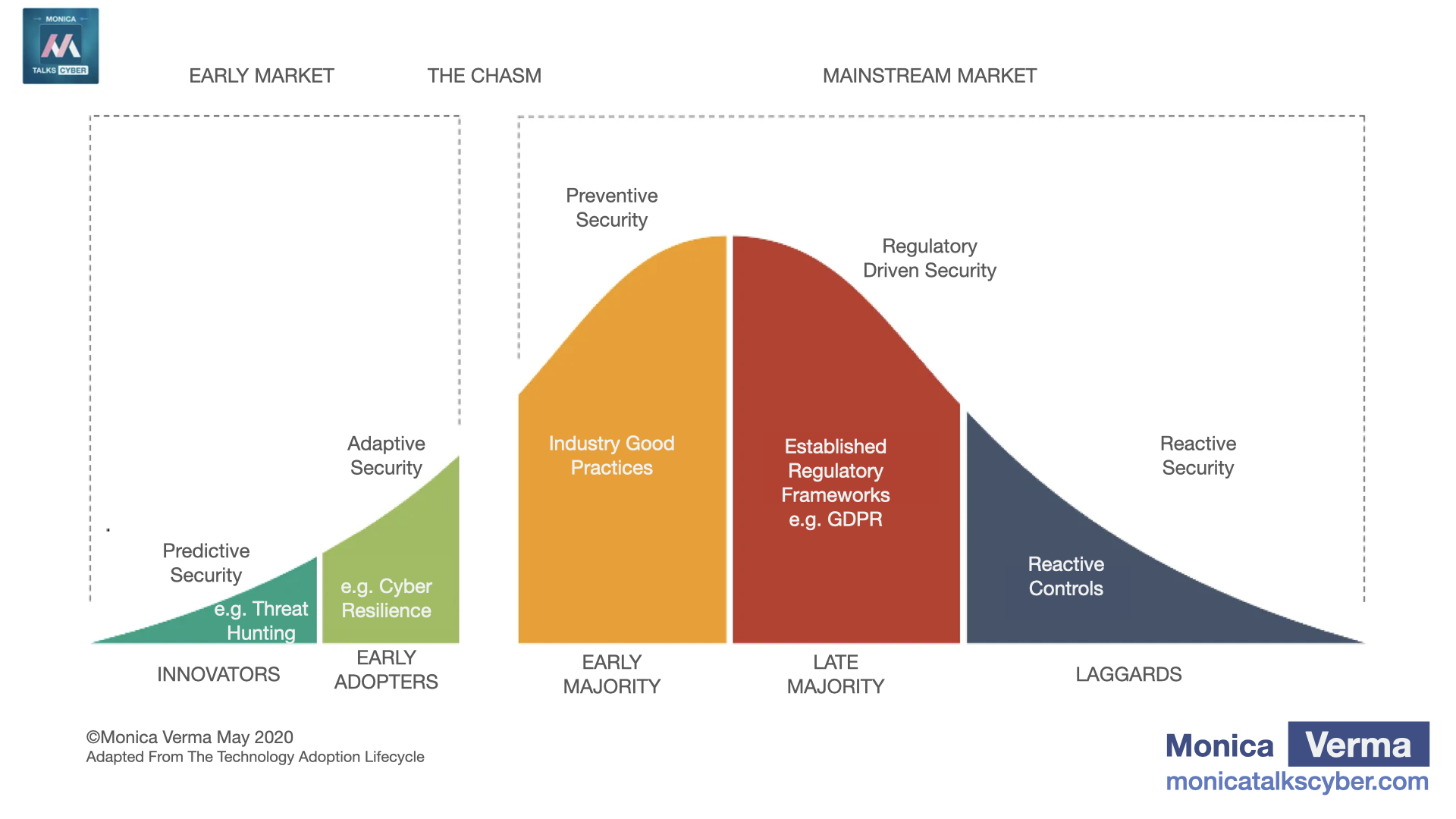

Figure 1: 2020 Cybersecurity Adoption Lifecycle Model

(See below for 2025 Updated Model)

That’s why I built the model above, published 5 years ago in 2020, to help companies and businesses understand how cybersecurity needed to evolve in order to continue to support businesses and serve society. In that model I showcased how companies should think about and implement cyber maturity: not in terms of how automated your process is, but in terms of how your organisation manages the "unknowns". That's where adaptive and predictive security measures come into the picture. In a nutshell, the model showed that cybersecurity adoption in the market needed to evolve, and that both adaptive and predictive security measures would (need to) become mainstream and good practice over the coming years.

Why is this important, why is it still relevant today and how do we solve this?

In this blog, I’ll cover:

The key highlights from that model

What it "predicted" in terms of how cybersecurity needs to evolve

Why this is important and even more relevant in today’s world of AI

What the outlook is for the model in terms of adaptive and predictive security

How to better defend against the future of AI and cyberattacks to become resilient in the world of AI

First, let’s look at the problem.

The Problem

It’s no surprise to anyone that most of the last decade has been driven by uncertainties and the unknowns. There are at least three types of unknowns, typically:

The unknown knowns (i.e. tacit knowledge) e.g. the risks we mitigated based on our experience or intuition but without complete knowledge of attribution or the entire kill chain.

The known unknowns (i.e. the ignorance we are aware of) e.g. advanced persistent threats (APTs), supply chain vulnerabilities, insider threats, etc. We know they exist but don’t know when they’ll be exploited or when they’ll materialise.

The unknown unknowns (i.e. the meta-ignorance) e.g. the AI and cyber threats and risks that we don't even know we don't know. Since we don’t know what we don’t know, it’s hard even to give examples before the fact. These are the most dangerous.

The hardest one is the unknown unknowns. This meta-ignorance was triggered, for example, by the global pandemic, then by the global geopolitical crisis (Ukraine-Russia warfare) and recently by AI. With AI, we have one more aspect to add: unpredictability. That’s a massive one, so let’s unpack it.

The biggest challenge with AI isn’t an army of AI-robots taking over humanity. It’s AI (being used in) hacking humans and businesses in ways we don’t know we don’t know. It’s AI going rogue in ways we don’t know we don’t know. Ultimately, it’s the meta-ignorance around AI risks.

There have been myriad cases in which AI, unknowingly and unintentionally, has heavily influenced human decision-making, with dire or irreversible consequences. One example is the parents of a 16-year-old who took his own life: they have sued OpenAI, alleging that ChatGPT drove him to suicide. Another is an elderly man who passed away after an AI chatbot led him to believe he had a real girlfriend in New York, so he left his wife to go and visit his “girlfriend” one last time.

In my keynote on bridging the AI and cyber leadership paradox, which I gave in Canada in September 2025, I talked about the concept of Autonomous AI hacking (btw, well before Anthropic released its paper on AI-orchestrated cyberattacks in November 2025) and why we need to move the needle towards adaptive and predictive security, something I originally talked about in my model in 2020.

You can watch my keynote here.

Video 1: Monica's Keynote in Canada on Adaptive and Predictive Security Bridging the AI and Cyber Gap (Sept '25)

Additionally, what I talked about in that keynote was the fact that we will soon come to the point where agentic AI, with some level of autonomy in decision making and actions, will be able to attack other agentic AI, infrastructure or you, with little to no human intervention. For example, my AI-assistant could hack your AI-assistant to carry out a task based on a decision it is made to believe, without you or me even knowing about it until it’s too late. This is also a specific example that combines AI going rogue with AI hacking autonomously.

That unpredictability combined with our meta-ignorance (the unknown unknowns) is my biggest worry.

Now the next question is how do we fight that?

— TLDR—

If you don't want to read the entire blog, here's my short answer (Obviously it misses all the details, and the devil is in the details, but here you go):

We fight that by using, and becoming better at building, not only adaptive security measures but, more importantly, predictive security measures with AI. For example:

Using AI to hunt threats (in real-time) at speed and scale

Using AI to better map your threat landscape

Using AI to "predict" threats in real-time through behavioural analysis and suspicious pre-activities, and

Using predictive analysis to put measures in place before a threat explodes.
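To make the idea of "predicting" threats through behavioural analysis a bit more concrete, here is a minimal sketch. It is not a production detector and not how any particular product works: it simply scores how far a new observation sits from a behavioural baseline (a z-score) and flags it as a suspicious pre-activity above a threshold. The function names, the failed-login telemetry and the threshold of 3.0 are all assumptions made for illustration.

```python
from statistics import mean, stdev


def anomaly_score(baseline, observed):
    """Return how many standard deviations `observed` sits above the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Flat baseline: any deviation at all is treated as maximally unusual.
        return 0.0 if observed == mu else float("inf")
    return (observed - mu) / sigma


def flag_pre_activity(baseline, observed, threshold=3.0):
    """Flag an observation as suspicious if its score reaches the (assumed) threshold."""
    return anomaly_score(baseline, observed) >= threshold


# Hypothetical telemetry: failed logins per hour for one service account.
baseline_failed_logins = [2, 3, 1, 4, 2, 3, 2, 3]

print(flag_pre_activity(baseline_failed_logins, 3))   # close to normal behaviour
print(flag_pre_activity(baseline_failed_logins, 25))  # far above the baseline
```

In practice the baseline would come from real-time telemetry and the scoring would likely be a trained model rather than a single statistic, but the shape is the same: learn what normal looks like, then act on the deviation before it becomes an incident.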

Here's why I believe this is the future.

I am a big fan of threat modeling. Even before I officially worked as a hacker, more than 20 years ago, I wrote my Master Thesis on Attacker-Centric Web Application Threat Modeling, for which I received the best master thesis award. Since then I have led, built and implemented not only cyber risk management but also enterprise risk management across finance, healthcare and other critical infrastructure sectors, with three key elements:

a) Threat landscape integrated into the risk management framework, especially for cyber

b) Cyber / Tech risk integrated into enterprise risk management

c) Threat modeling as the true backbone of security engineering

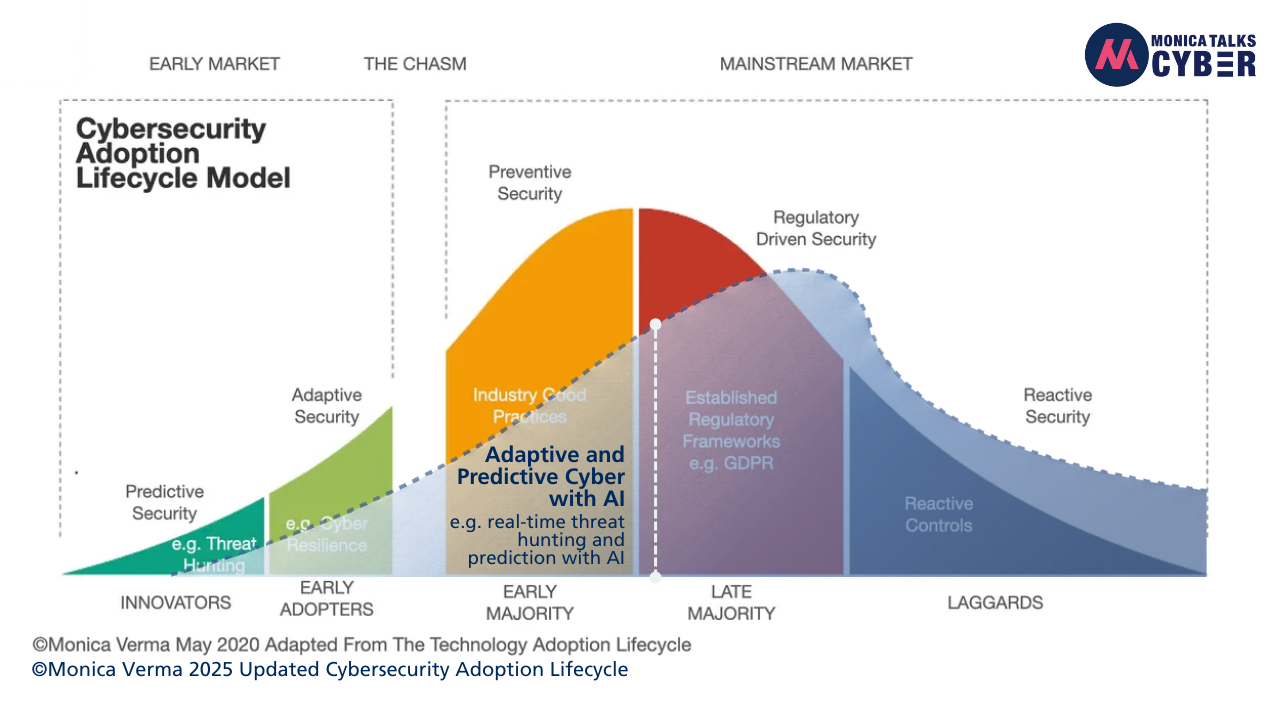

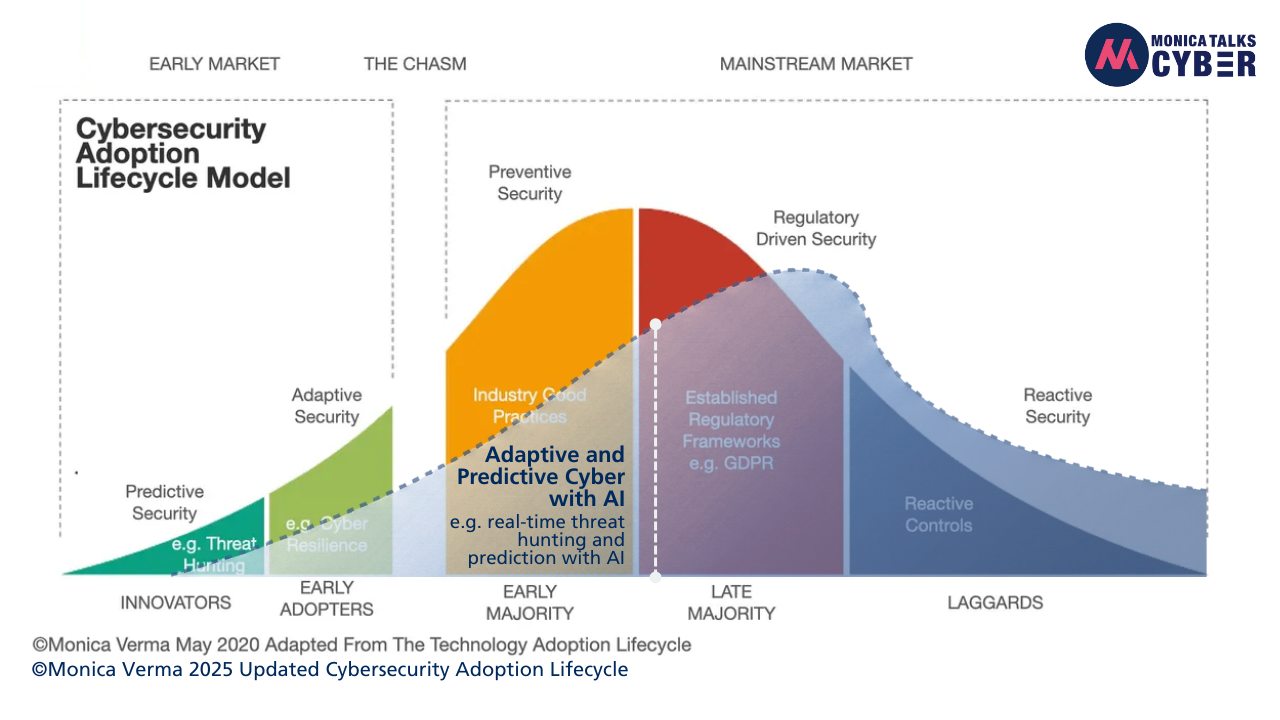

AI will not only change threat research, but also threat hunting, real-time vulnerability patching and evidence-based risk management, to name just a few examples of predictive security with AI. Here's my updated 2025 version of this model:

Figure 2: 2025 Updated Cybersecurity Adoption Lifecycle Model

Now, let’s dive into the details below.

***

Setting the Foundation

Here’s where most maturity models fall short. We have been (and most companies still are) doing cybersecurity maturity all wrong. This is the hill I'll die on.

Most maturity models focus on how well a process is defined, managed, automated, etc. I get it, automation matters, especially with AI, for processing data at speed and scale, which is great. But that maturity framework leaves a lot out. Most companies put in measures only to address known threats and risks. Then they wonder why they weren’t ready when $h%t hit the fan, or why they still got breached despite patching most of their vulnerabilities. It is because they never catered for the risks that weren’t in their risk register, against the threats they didn’t know about, and that "unknown" risk materialised and became a full-blown crisis.

Here’s why most companies are lost when it comes to cyber maturity and defending their businesses against the threats and cyberattacks:

Making a bad process highly defined, managed and automated doesn’t make it any better. Bad processes, when automated, are just even worse processes that are now everywhere, churning out incorrect output faster, not better. Unless you fix the process, making it faster, automated and scalable won’t make it any better.

If regulations are the only reason why you do what you do, you’ll never be truly resilient. As I wrote back then in 2020 in my published article:

In addition to preparation and recovery, one of the key success factors in building a strong cyber-resilience framework is adaptability and predictability aka adaptability to an ever-evolving threat landscape and predictability of the unknowns.

There is no 100% security, we know that. Add to that AI and unpredictability. In this insecure, uncertain and unpredictable world of AI, maturity isn’t about preventing or even just protecting, it’s about adapting and predicting (and here's the key: with a certain degree of probability) the threats and the risks of the unknowns (or maybe just unknown to your organisation). It’ll never be 100%. We are not aiming for 100% anything. That’s why preventive measures alone will never work, even if you bake them into regulations.

In order to continue experimenting with AI, we need to look at cyber maturity very differently. AI will break things. It's not some automated process that'll fix it. It's the extent of adaptability and the level of predictability that will determine the actual impact of the unintended consequences and how we will manage that impact.

Looking at security maturity as just an ITIL process that's well defined, managed, automated, etc. takes out of the equation all the key stakeholders that you truly need to make an organisation mature. It's not just IT. It's operations. It's product teams. It's architecture. It's engineering. It's HR. Looking at maturity differently and increasing it will require all of them to be involved, and rightly so. Not just an IT process that's automated.

Why It is Still Highly (and Maybe Even More) Relevant Today

You can read my previous full article on my 2020 cybersecurity adoption lifecycle here but here are the key things my model showcases and especially how it still is and has become even more relevant in the world of AI:


Security maturity isn’t about processes, or at least shouldn’t be. As I said earlier, a highly-automated process that’s crap is just a crap process now operating at massive speed across a massive scale.

As the model shows, for organisations to truly mature in the digital world, and now in the AI world, they must cross the chasm from preventive security to adaptive and predictive security. One such example of adaptive security is policies being activated in real-time during an ongoing crisis to minimise the damage to your crown jewels or to your Minimum Viable Business (MVB).
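Here is a rough sketch of what "policies being activated in real-time" could look like in code. It is purely illustrative: the threat levels, the policy names and the `Asset` type are all hypothetical, and a real implementation would sit inside your SOAR, IAM or network tooling rather than in a standalone script. The point is only that the controls in force are a function of the current situation and of whether an asset belongs to the MVB.

```python
from dataclasses import dataclass
from enum import IntEnum


class ThreatLevel(IntEnum):
    NORMAL = 0
    ELEVATED = 1
    CRISIS = 2


@dataclass(frozen=True)
class Asset:
    name: str
    in_mvb: bool  # True if the asset is part of the Minimum Viable Business


def active_policies(asset, level):
    """Return the (hypothetical) policies in force for an asset at a given threat level."""
    policies = ["baseline_monitoring"]
    if level >= ThreatLevel.ELEVATED:
        policies.append("step_up_authentication")
    if level == ThreatLevel.CRISIS:
        if asset.in_mvb:
            # Crown jewels: contain and keep running with minimal, tightly controlled access.
            policies += ["isolate_network_segment", "restrict_to_break_glass_accounts"]
        else:
            # Everything else: shed access that is not needed to keep the business alive.
            policies.append("suspend_non_essential_access")
    return policies


payments = Asset(name="payments-core", in_mvb=True)
intranet = Asset(name="intranet-wiki", in_mvb=False)

print(active_policies(payments, ThreatLevel.NORMAL))
print(active_policies(payments, ThreatLevel.CRISIS))
print(active_policies(intranet, ThreatLevel.CRISIS))
```

The design choice worth noticing is that the MVB flag, not the org chart, decides what gets protected first when the threat level changes.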

Today, some companies have either crossed or are in the process of crossing that chasm with adaptive security. But many haven't even started.

Resilience and adaptive security are becoming mainstream in the market and the key focus of many organisations. However, not every organisation is at the same maturity level, even when it comes to adaptive security.

For example, the finance sector is much further along than others due to the nature of its global impact on the economy, with cyber resilience or adaptive security as a key part of its operational and business resilience.

Add to that major regulatory frameworks like NIS2 and DORA, which came into effect after my model was published and now mandate resilience capabilities as a key part of the business. In fact, regulated organisations are required to test those capabilities and report on them to the authorities.

Minimum Viable Business (MVB) is a concept being driven in most critical infrastructure companies. I know, as I have helped many organisations build resilience and adaptive security for their critical infrastructure / MVB to defend against cyberattacks and crises. Building resilience “at scale” is no longer optional but mandatory for every organisation.

Having said that, adaptive security is not enough. I said that 5 years ago. My model showcased that the next wave of cybersecurity adoption in the market and maturity in organisations won’t just be adaptive security but predictive security, e.g. predicting and defending against threats in real-time, threat hunting in real-time, etc. It was true back then in terms of where we were heading. It’s truer today, especially with AI. Let me explain.

For me, "Autonomous AI Hacking" is when AI autonomously attacks organisations, infrastructure or even other AI systems with little to no human intervention. I have spoken about this concept extensively in many of my keynotes, notably in this keynote here in Sept 2025 (prior to Anthropic's report).

Autonomous AI hacking (without human intervention) isn’t something we are going to get out-of-the-box today, despite Anthropic’s claims that China has been “abusing” Claude to conduct AI-orchestrated cyberattacks with 80%-90% autonomy (which isn't entirely accurate). As you have probably read, Anthropic recently published a report claiming that hackers sponsored by China are carrying out automated AI-based cyberattacks to autonomously compromise chosen targets with little human involvement. According to the report, this is the "first reported AI-orchestrated cyber espionage campaign". Even though it was not really autonomous in its entirety, at least not to the degree claimed, I truly believe this will eventually happen in real-world scenarios and at large scale.

It’s not so much about the sophistication. Autonomous AI hacks do not necessarily need to be complex or sophisticated. Obviously, agentic AI carrying out tasks with some level of autonomy is an advancement in itself, but the attack itself doesn't need to be sophisticated. It can be, but it doesn't have to be. The key is that AI is able to, with little to no human intervention, carry out tactical and chained tasks throughout e.g. a cyber kill chain to execute the attack and achieve its malicious goal, whatever that is. It is more than just automating cyberattacks at speed and scale. Surely, more sophisticated attacks will require some human intervention, but AI is already automating a few key parts of the cyber kill chain, including not only real-time vulnerability discovery but also exploit creation.


We need AI to defend against the darker side of AI. It is this very AI that we will need not only to hunt for threats in real-time, but also to correlate them in terms of impact on business risks, find vulnerabilities, and patch them in real-time to defend against (semi-)autonomous AI cyberattacks.

It's important to differentiate between AI-powered cyberattacks and Autonomous AI Hacking.

Here's how I define and distinguish them. Attacks like deepfakes, personalised phishing, etc. are AI-powered. We use AI, e.g. GANs (Generative Adversarial Networks), as the foundation for creating deepfakes that look extremely real, or we use AI to generate highly personalised and extremely convincing phishing emails. Those emails may well be sent automatically, but a human is still the one carrying out the attack. Autonomous AI Hacking, by contrast, is about the end-to-end autonomy of carrying out chained tasks across an entire cyber kill chain, or most parts of it. The AI is used not only for generating “tokens” or code, but also for creating workflows, analysing input, making certain decisions and, based on those, taking actions to carry out the next step in the kill chain, with little to no human intervention, ultimately achieving its "malicious" goal.


In the world of AI, where AI-orchestrated cyberattacks and autonomous AI hacking will eventually become mainstream, predictive security is going to be even more important than before. I have been talking about autonomous AI hacking for a while now, notably in my recent keynote “The Leadership Paradox: Bridging the AI and Cyber Gap” in Canada, well before the Anthropic paper came out. Now, I get it, that research paper had many flaws, including but not limited to the fact that while they claimed the attack was 80%-90% automated, human intervention was critical for review, verification, launch, etc. But here’s what I talked about in my keynote in Canada, and here’s why autonomous AI hacking is the future we need to be ready to defend against: even if there is no true autonomous AI hacking today, and even if the AI-orchestrated cyberattack described by Anthropic required a lot of human intervention, manual verification and approvals, we are already at a point where, say, my AI-assistant could hack your AI-assistant to carry out a task based on a decision it is made to believe, without you even knowing about it until it’s too late.

To ensure we're not lagging, it's not enough to be proactive; we need to be adaptive and predictive.

Based on the above, here's my updated 2025 version of this model (see Figure 2 above).

There is a foundational element, though, that's needed. None of the above can happen without good-quality data, e.g. real-time telemetry, pre-training data sets, behavioural analytics, etc., and without AI becoming better and better at "predicting" with an even higher degree of probability and far fewer hallucinations.

AI is only as good as its underlying data because, as you know, Garbage In Garbage Out (GIGO) is highly relevant when it comes to AI models. This is extremely important for both adaptive and predictive security. (Semi-)autonomous "decision" making only works well when the data is the “right” data, e.g. it is complete, it’s inclusive, it is accurate, etc.
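One simple way to picture GIGO in this context is a data-quality gate in front of any (semi-)autonomous decision. The sketch below is an assumption-laden illustration, not a standard: the required field names, the 95% completeness threshold and the function names are all made up for the example. It only checks completeness; accuracy and inclusiveness would need their own checks.

```python
# Hypothetical minimum set of fields a telemetry event needs to be usable.
REQUIRED_FIELDS = ("timestamp", "source", "event_type", "user")


def completeness(events):
    """Return the fraction of events that carry every required field with a non-empty value."""
    if not events:
        return 0.0
    complete = sum(
        1
        for event in events
        if all(event.get(field) not in (None, "") for field in REQUIRED_FIELDS)
    )
    return complete / len(events)


def allow_autonomous_action(events, min_completeness=0.95):
    """Only let the AI act on its own when the underlying data clears the (assumed) quality bar."""
    return completeness(events) >= min_completeness


telemetry = [
    {"timestamp": "2025-12-18T09:00:00Z", "source": "vpn", "event_type": "login", "user": "alice"},
    {"timestamp": "2025-12-18T09:01:00Z", "source": "vpn", "event_type": "login", "user": ""},
]

print(completeness(telemetry))
print(allow_autonomous_action(telemetry))
```

When the gate fails, the sensible fallback is to route the decision to a human rather than let the model act on incomplete data.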

Adaptability and Predicting The Future

Here's the underlying message. For the last decade and especially since I released my model 5 years ago, I have been advocating that adaptive and predictive security is the future of cybersecurity. I stand by it.

As per the 2020 model, most organisations and businesses are in the mainstream cybersecurity market, that is, in the preventive security and regulatory-driven security fields. There are very few that build and truly implement cybersecurity to advance society and serve as a business differentiator. This requires investing and working in the fields of adaptive security and even predictive security.

Now with AI, I have an even higher degree of confidence that this will be the case. I use the word “predicting” somewhat loosely, because there is no 100% predictability, but we can shape the outcomes we want based on the actions we take today. What I built 5 years ago, I believe, is even more true and relevant today and will continue to shape the cybersecurity industry. I still believe we are crossing the chasm with adaptive and predictive security and will continue to do so. That shift towards adaptive and predictive security is needed both in adoption across the mainstream market and in organisations making it a part of their maturity equation.

–– Monica Verma

